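Prerequisites

This walkthrough assumes you already understand ServiceAccount, ClusterRole, ClusterRoleBinding, and related RBAC concepts.

A brief introduction to each:

- ServiceAccount: gives a Pod an identity so that it can interact securely with the Kubernetes API. The Local Path Provisioner needs to access and manage resources such as PVs, PVCs, and Nodes.
- ClusterRole: defines a set of permissions that allow an identity (such as a ServiceAccount) to perform specific operations on resources in the cluster.
- ClusterRoleBinding: binds a ClusterRole to one or more subjects (such as a ServiceAccount) so that those subjects receive the permissions the ClusterRole defines.

Goal

Hand-build a StorageClass backed by Rancher's local-path provisioner, so that claiming a PVC dynamically provisions a PV.

Steps at a glance

- Create a namespace to isolate the resources
- Create the ServiceAccount, ClusterRole, and ClusterRoleBinding (explained under Prerequisites)
- Create the Deployment, which controls the number of Pod replicas
- Create the ConfigMap, which stores non-confidential configuration data (see: Storage Basics)
- Create the StorageClass (see step 4)

Enough talk, let's get hands-on.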

Namespace

kubectl create namespace local-path-storage

(ns is the short form of namespace, so kubectl create ns local-path-storage works as well.)

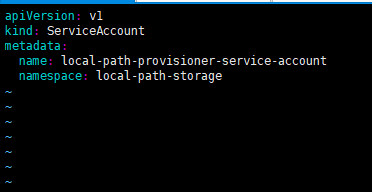

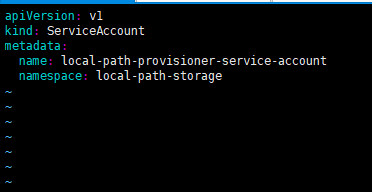

ServiceAccount

apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-path-provisioner-service-account
  namespace: local-path-storage

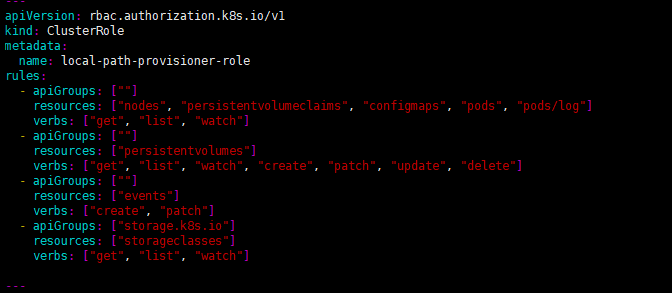

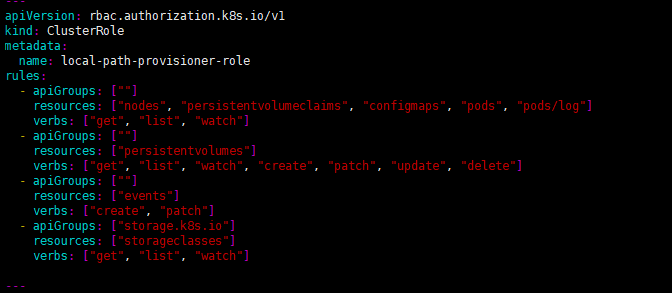

ClusterRole, taken straight from the official manifest

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-path-provisioner-role
rules:
  - apiGroups: [""]
    resources: ["nodes", "persistentvolumeclaims", "persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["batch", "extensions"]
    resources: ["jobs"]
    verbs: ["get", "list", "watch", "create", "delete"]

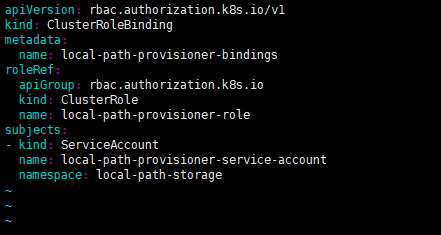

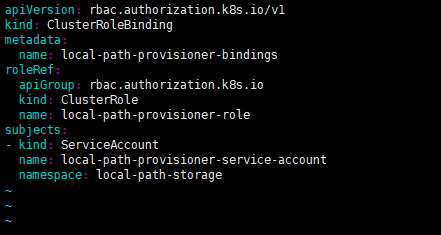

ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-path-provisioner-bindings
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: local-path-provisioner-role
subjects:
  - kind: ServiceAccount
    name: local-path-provisioner-service-account
    namespace: local-path-storage

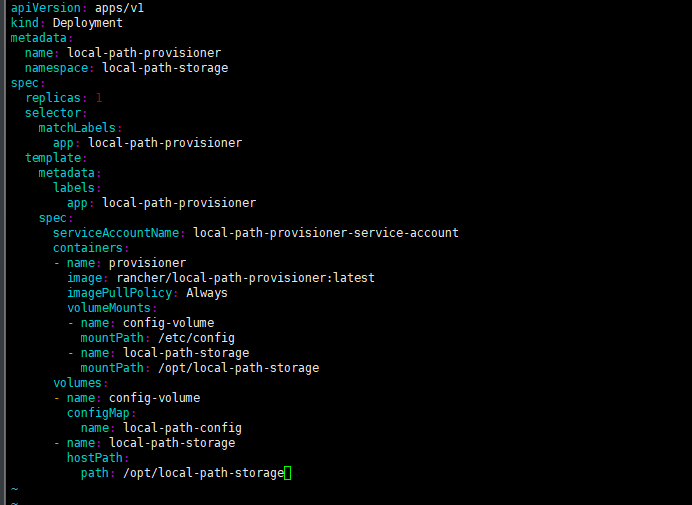

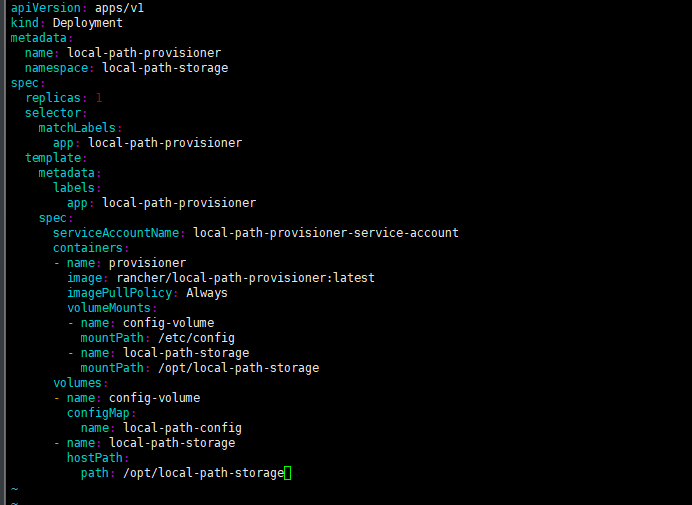
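
Once the binding is applied, it can be worth checking that the ServiceAccount really received the permissions. The following is only a minimal sketch built on kubectl auth can-i with impersonation. The function name sa_can_i is made up for this example, and running it assumes your own user is allowed to impersonate ServiceAccounts (a cluster-admin normally is).

```shell
# Hypothetical helper (not part of the original manifests): ask the API
# server whether the provisioner's ServiceAccount may perform VERB on
# RESOURCE, by impersonating that ServiceAccount.
sa_can_i() {
  verb="$1"
  resource="$2"
  kubectl auth can-i "$verb" "$resource" \
    --as="system:serviceaccount:local-path-storage:local-path-provisioner-service-account"
}
```

For example, sa_can_i create persistentvolumes is expected to answer yes with the ClusterRole above, while sa_can_i delete storageclasses should answer no, because that role only grants get, list, and watch on storageclasses.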

Deploy

apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-path-provisioner
  namespace: local-path-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-path-provisioner
  template:
    metadata:
      labels:
        app: local-path-provisioner
    spec:
      serviceAccountName: local-path-provisioner-service-account
      containers:
        - name: provisioner
          image: rancher/local-path-provisioner:master-head
          imagePullPolicy: Always
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            - name: local-path-storage
              mountPath: /opt/local-path-storage
      volumes:
        - name: config-volume
          configMap:
            name: local-path-config
        - name: local-path-storage
          hostPath:
            path: /opt/local-path-storage

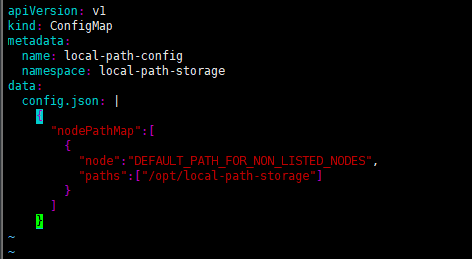

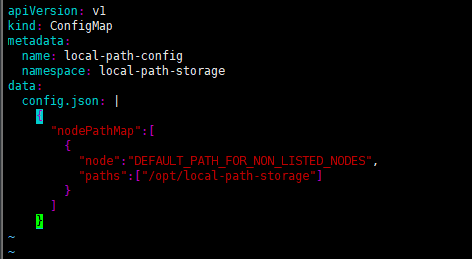

config

apiVersion: v1
kind: ConfigMap
metadata:
  name: local-path-config
  namespace: local-path-storage
data:
  config.json: |
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/opt/local-path-storage"]
        }
      ]
    }

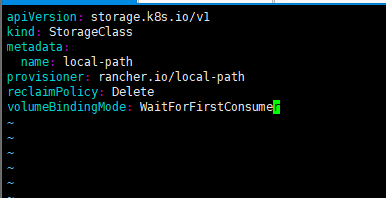

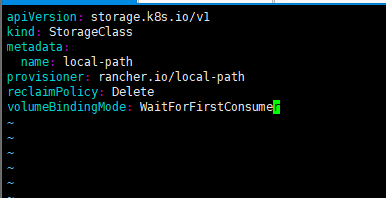
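
The Deployment mounts this ConfigMap, so its Pod can only start once both objects exist. As a rough sketch of how to confirm that the provisioner came up, the helper below waits for the rollout and then prints the latest log lines. The function name check_provisioner and the timeout value are assumptions chosen for illustration.

```shell
# Hypothetical helper: wait for the provisioner Deployment to become ready,
# then print the last lines of its log. The 120s timeout is an arbitrary
# choice for this sketch.
check_provisioner() {
  ns="local-path-storage"
  kubectl -n "$ns" rollout status deployment/local-path-provisioner --timeout=120s &&
    kubectl -n "$ns" logs deployment/local-path-provisioner --tail=20
}
```

Call check_provisioner after applying the Deployment and the ConfigMap. If the rollout does not finish within the timeout, the function returns a non-zero status and skips the log step.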

StorageClass

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
provisioner: rancher.io/local-path
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer

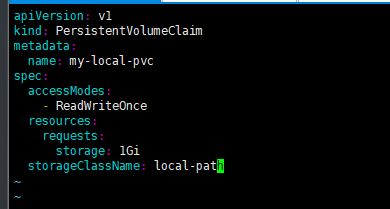

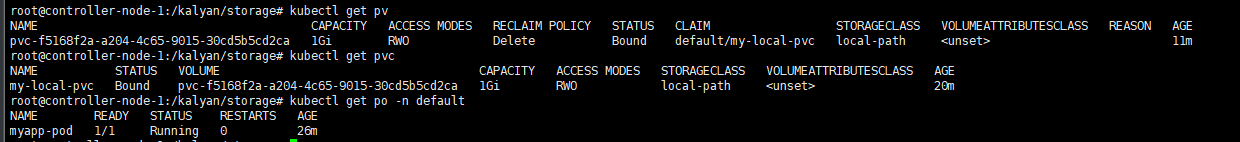

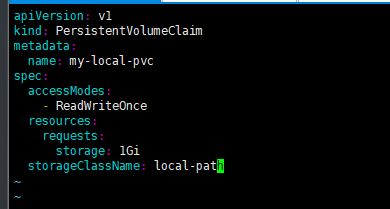

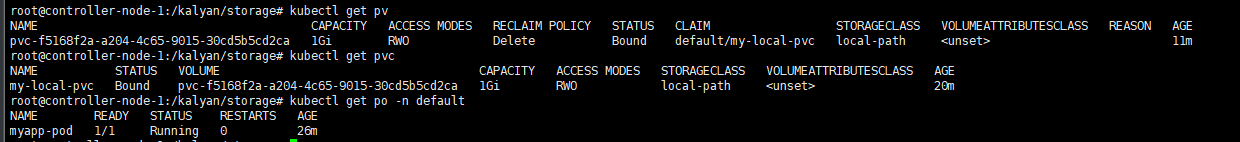

Verification

Create the PVC manifest:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-local-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: local-path

Because the StorageClass uses WaitForFirstConsumer, the PVC stays Pending until a Pod consumes it, so we also need a Pod:

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
spec:
  containers:
    - name: myapp-container
      image: m.daocloud.io/docker.io/library/nginx
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: my-local-storage
  volumes:
    - name: my-local-storage
      persistentVolumeClaim:
        claimName: my-local-pvc
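
The verification itself can be sketched as a small helper that applies both manifests, waits for the Pod, and then lists the claim and the volumes. The file names pvc.yaml and pod.yaml, the function name verify_local_path, and the timeout are assumptions for this example. Save the two manifests above under whatever names you prefer and adjust accordingly.

```shell
# Hypothetical helper: apply the claim and the Pod, wait until the Pod is
# Ready, then show the claim and the PersistentVolumes in the cluster.
# pvc.yaml and pod.yaml are assumed file names for the two manifests above.
verify_local_path() {
  kubectl apply -f pvc.yaml -f pod.yaml &&
    kubectl wait --for=condition=Ready pod/myapp-pod --timeout=180s &&
    kubectl get pvc my-local-pvc &&
    kubectl get pv
}
```

If dynamic provisioning works, the claim should move from Pending to Bound once the Pod has been scheduled, and kubectl get pv should list a volume whose claim is my-local-pvc.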

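Summary

The manifests above are only a reference; in practice you can use the official manifest. Note that when you switch to a mirror registry you have to switch it everywhere: every field named image must be replaced, including the one inside helperPod.yaml. A misconfiguration will most likely leave the Pod in CrashLoopBackOff.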

apiVersion: v1
kind: Namespace
metadata:
  name: local-path-storage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-path-provisioner-service-account
  namespace: local-path-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: local-path-provisioner-role
  namespace: local-path-storage
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch", "create", "patch", "update", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-path-provisioner-role
rules:
  - apiGroups: [""]
    resources: ["nodes", "persistentvolumeclaims", "configmaps", "pods", "pods/log"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "patch", "update", "delete"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: local-path-provisioner-bind
  namespace: local-path-storage
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: local-path-provisioner-role
subjects:
  - kind: ServiceAccount
    name: local-path-provisioner-service-account
    namespace: local-path-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-path-provisioner-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: local-path-provisioner-role
subjects:
  - kind: ServiceAccount
    name: local-path-provisioner-service-account
    namespace: local-path-storage
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-path-provisioner
  namespace: local-path-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-path-provisioner
  template:
    metadata:
      labels:
        app: local-path-provisioner
    spec:
      serviceAccountName: local-path-provisioner-service-account
      containers:
        - name: local-path-provisioner
          image: docker.m.daocloud.io/rancher/local-path-provisioner:master-head
          imagePullPolicy: IfNotPresent
          command:
            - local-path-provisioner
            - --debug
            - start
            - --config
            - /etc/config/config.json
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config/
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: CONFIG_MOUNT_PATH
              value: /etc/config/
      volumes:
        - name: config-volume
          configMap:
            name: local-path-config
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
provisioner: rancher.io/local-path
volumeBindingMode: Immediate
reclaimPolicy: Delete
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: local-path-config
  namespace: local-path-storage
data:
  config.json: |-
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/opt/local-path-provisioner"]
        }
      ]
    }
  setup: |-
    set -eu
    mkdir -m 0777 -p "$VOL_DIR"
  teardown: |-
    set -eu
    rm -rf "$VOL_DIR"
  helperPod.yaml: |-
    apiVersion: v1
    kind: Pod
    metadata:
      name: helper-pod
    spec:
      priorityClassName: system-node-critical
      tolerations:
        - key: node.kubernetes.io/disk-pressure
          operator: Exists
          effect: NoSchedule
      containers:
        - name: helper-pod
          image: m.daocloud.io/docker.io/library/busybox
          imagePullPolicy: IfNotPresent
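
When the provisioner does end up in CrashLoopBackOff, the usual first step is to look at the Pod status, its events, and the log of the container that crashed. The helper below is only a sketch of that routine. The function name debug_provisioner is hypothetical, and the label selector assumes the app=local-path-provisioner label used in the Deployment above.

```shell
# Hypothetical helper: collect the usual evidence when the provisioner Pod
# keeps restarting.
debug_provisioner() {
  ns="local-path-storage"
  selector="app=local-path-provisioner"
  # Pod phase, restart count, and the node it was scheduled on.
  kubectl -n "$ns" get pods -l "$selector" -o wide
  # Events such as image pull failures or missing ConfigMaps show up here.
  kubectl -n "$ns" describe pods -l "$selector"
  # --previous prints the log of the last terminated container instance.
  kubectl -n "$ns" logs deployment/local-path-provisioner --previous --tail=50
}
```

An image that cannot be pulled typically surfaces in the describe output, while a bad config.json or a missing environment variable is more likely to appear in the previous container's log.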
